In the second part, we are going to set up the shader part of the Entity Influence system. In this system, we will pre-compute some of the required parameters so that the vertex shader can later read them and use the appropriate motion equation to calculate the angle.

Since different kinds of grass may react differently depending on grass-specific parameters, the design philosophy is to keep entity influence and grass separate: we do not want any grass-specific constants in the entity influence system.

NOTE: This part expects you to know how compute shaders work, and to have some knowledge of the render pipeline you are working with. You should at least know how to create a `CustomPass` or `ScriptableRenderPass` in your render pipeline. We will work in HDRP and use `CustomPass` here. I will try my best to abstract away render-pipeline-specific features (which also guards against future API changes). Feel free to make the necessary adjustments for the API available to you.

Overview of the Entity Influence System

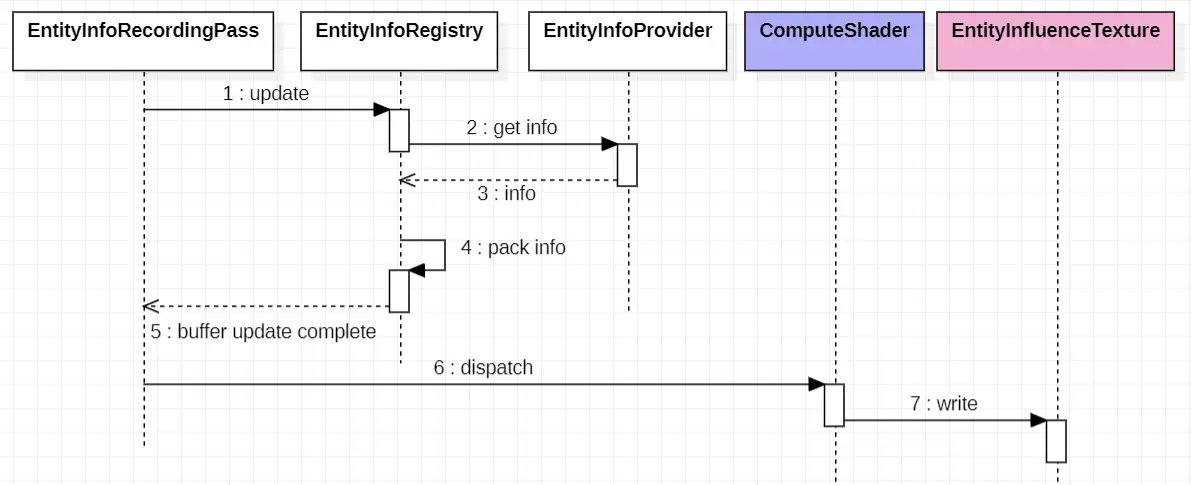

Each entity has an `EntityInfoProvider` attached to it. The script informs `EntityInfoRegistry` about the entity's current position, radius, height, etc.

Each frame, `EntityInfoRegistry` packs this information into a `GraphicsBuffer`. A pass binds this buffer, along with other globally tweakable parameters, to a compute shader and dispatches it. The compute shader calculates the influence of each entity and writes the results to the Entity Influence Texture(s), which are later sampled in vertex shaders.

We use textures because multiple entities may influence the grass: sampling a texture is cheaper than iterating through every entity in the vertex shader. If you only have a very small number of entities, you can pass the buffer directly to the vertex shader and do all the calculations there, at the cost of vertex shader performance.

We defer part of the calculation to a compute shader because that lets us harness Async Compute. If we did everything in the grass vertex shader, all the work would run on the graphics queue. Even without async compute, the compute shader doesn't depend on the output of any other pass, so the driver can schedule it more freely. The drawback is that we need a texture to store the intermediate results, so this isn't an obvious win but a memory-speed trade-off.

Inputs and Outputs

Let’s review the theory and determine what data we need to build the system.

\[\theta_0(d) = \frac{\pi}{2} - \arctan\left(\frac{2h_0d}{R_0^2-d^2}\right)\]

For the initial angle, we need the constant \(c\) and the distance \(d\). For \(c\), we need the height \(h_0\) and radius \(R_0\) of the instigator, which are simply two tweakable floats.

As for \(d\), it is by definition the distance between the center of the instigator (i.e. the instigator's transform position) and the root of the grass. The root of the grass may seem like grass-specific data, but we can simply convert each pixel's position to world space and treat it as the root. The trade-off is that the resolution of the texture then determines the accuracy of our simulation.
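A quick way to get a feel for the formula is to evaluate it on the CPU. The C++ sketch below uses a hypothetical helper, `InitialAngle`; the article's own code is HLSL. It computes \(\theta_0(d)\) and clamps the denominator to 0.00001, the same clamp the compute shader applies, so \(d \ge R_0\) does not divide by zero.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// theta0(d) = pi/2 - atan(2 * h0 * d / (R0^2 - d^2))
// h0: entity height, r0: entity radius, d: horizontal distance from the
// entity centre to the grass root. The denominator is clamped like in the shader.
double InitialAngle(double h0, double r0, double d) {
    assert(d >= 0.0);
    const double halfPi = std::acos(0.0);
    const double denom = std::max(r0 * r0 - d * d, 1e-5);
    return halfPi - std::atan(2.0 * h0 * d / denom);
}
```

For \(h_0 = 1\) and \(R_0 = 2\), the angle is \(\pi/2\) at \(d = 0\). It is about 0.983 at \(d = 1\) and approaches 0 as \(d\) approaches \(R_0\).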

As for the motion equation, we take the underdamped case as an example:

\[\theta(t) = \theta_0 e^{-\gamma t}\cos(\omega t)\]

With:

- \(\gamma = \frac{b}{2l_0}\)

- \(\omega_0 = \sqrt{\frac{g-k}{l_0}}\)

- \(\omega = \sqrt{\lvert\gamma^2-\omega_0^2\rvert}\)
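To see the damping in numbers, here is a C++ sketch of the underdamped equation. `UnderdampedAngle` is a hypothetical helper. \(g\) is assumed to be gravitational acceleration, with a default of 9.81. The `assert` requires \(g \ge k\) so that \(\omega_0\) stays real.

```cpp
#include <cassert>
#include <cmath>

// Underdamped bending angle theta(t) = theta0 * exp(-gamma * t) * cos(omega * t), with
//   gamma  = b / (2 * l0)
//   omega0 = sqrt((g - k) / l0)
//   omega  = sqrt(|gamma^2 - omega0^2|)
// l0: grass length, k: elasticity constant, b: damping coefficient,
// t: elapsed time since the grass left an entity's influence.
// Assumption: g is gravitational acceleration, 9.81 by default.
double UnderdampedAngle(double theta0, double t, double l0, double k, double b,
                        double g = 9.81) {
    assert(l0 > 0.0 && g >= k);  // keeps omega0 real
    const double gamma = b / (2.0 * l0);
    const double omega0 = std::sqrt((g - k) / l0);
    const double omega = std::sqrt(std::abs(gamma * gamma - omega0 * omega0));
    return theta0 * std::exp(-gamma * t) * std::cos(omega * t);
}
```

At \(t = 0\) the function returns \(\theta_0\). After that, the envelope \(\theta_0 e^{-\gamma t}\) bounds it. With \(b = 0.2\) and \(l_0 = 0.5\), \(\gamma = 0.2\), so the amplitude shrinks to about 67% after 2 seconds.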

We need the grass length \(l_0\), the elasticity constant \(k\), the damping coefficient \(b\), and the elapsed time \(t\). The length, elasticity constant, and damping coefficient are all grass-specific, so we only need to store the elapsed time. In our case, elapsed time is the time since the grass left the influence of an entity. There are two ways to store it:

- Store the timestamp `t` when the grass was last in an entity’s influence; in the vertex shader, we can then calculate the elapsed time with `_Time.y - t`.

- Store the elapsed time `dt` directly. Each frame we increase `dt` by `unity_DeltaTime.x`, and set `dt = 0` if the grass is in any entity’s influence.

To avoid potential overflow, as well as the precision loss an ever-growing timestamp suffers in a 16-bit channel, we will adopt the second approach.

Finally, calculating the angle is not enough. We still need to know in which direction the grass should bend, which means we need a `Vector2` to represent the direction. We also need the elevation of the entity’s bottom surface, because if an entity is above the grass, it should exert no influence on it. (Remember that the height of the grass is only available in the vertex shader, so we can’t pre-calculate this check!)

To summarize, this is the information our Entity Influence System must produce:

- Direction of the influence (`Vector2`, or 2 `float`s)

- Elevation of the bottom surface of the entity (`float`)

- Initial angle (`float`)

- Elapsed time (`float`)

“Blast! That’s 5 floats in total! That means we need 2 textures to fit them all in!” - Very perceptive! But here’s a trick we can use… when we need the direction, we do not need the magnitude of the `Vector2`, because the vertex shader will normalize it anyway. That means the magnitude of the vector can store another float… let it be the initial angle, then!

NOTE: Storing the initial angle in the magnitude is a deliberate choice. The bottom surface elevation can be negative, so we can’t store it in a magnitude. Elapsed time is never negative, but it is reset to 0 whenever we update the influence, which would also zero out the direction. The initial angle has no such side effect, so it is the most suitable value to store in the magnitude.
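The round trip can be checked with a small C++ sketch. `PackDirectionAngle` and `UnpackDirectionAngle` are hypothetical names, and plain `float` stands in for `half`, so 16-bit rounding is ignored. Unpacking divides by the magnitude, so the stored angle must stay strictly positive.

```cpp
#include <cassert>
#include <cmath>

struct Float2 { float x, y; };

// Encode: normalize the direction, then scale it by the initial angle (RG channels).
Float2 PackDirectionAngle(Float2 dir, float initialAngle) {
    float len = std::sqrt(dir.x * dir.x + dir.y * dir.y);
    assert(len > 0.0f && initialAngle > 0.0f);
    return {dir.x / len * initialAngle, dir.y / len * initialAngle};
}

// Decode (vertex shader side): the magnitude is the angle, the normalized vector the direction.
void UnpackDirectionAngle(Float2 rg, Float2& dir, float& initialAngle) {
    initialAngle = std::sqrt(rg.x * rg.x + rg.y * rg.y);
    assert(initialAngle > 0.0f);  // a zero angle would destroy the direction
    dir = {rg.x / initialAngle, rg.y / initialAngle};
}
```

For example, packing direction (3, 4) with angle 0.5 stores (0.3, 0.4). Unpacking it gives back the angle 0.5 and the direction (0.6, 0.8). This is also why the shader code clamps the stored angle with `max(0.01, initialAngle)`: a zero angle would leave no direction to recover.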

Writing the Compute Shader

Let’s start by declaring the inputs and outputs.

Influence Info

Each element of the entity influence buffer holds two `float3` and two `float` fields. We pack them into an `InfluenceInfo` struct and use a `StructuredBuffer` to pass the data from CPU to GPU. `_InfluenceInfoCount` tracks the effective length of the buffer and equals the number of registered entities. We allocate a buffer that is large enough for `_InfluenceInfo` (e.g. 256 elements) and use the count to delimit the valid data range.

```hlsl
struct InfluenceInfo
{
    float3 worldPosition;
    float radius;
    float3 worldVelocity;
    float height;
};

// raw influence info data
StructuredBuffer<InfluenceInfo> _InfluenceInfo;

// valid length of influence info
int _InfluenceInfoCount;
```

NOTE: The order of the fields in `InfluenceInfo` is important due to alignment requirements. GPU buffer layouts follow alignment rules under which types like `float3` may be padded or pushed to the next 16-byte boundary. Exactly which rules apply depends on the buffer type and graphics API. For example, HLSL constant buffers never let a field straddle a 16-byte boundary, and Vulkan’s std430 layout aligns `float3` to 16 bytes. Ordering fields carefully is therefore the portable choice. If you don’t account for this, you may end up allocating more memory than necessary.

The struct above, where each `float3` is followed by a `float` so that the pair fills one 16-byte block, takes 32 bytes in total. However, if you order your struct like this:

```hlsl
struct InfluenceInfo
{
    float3 worldPosition;
    float3 worldVelocity;
    float radius;
    float height;
};
```

it will take up 48 bytes (3 `float4`s), because the first `float3` cannot be packed together with the second `float3`. As a result, it gets its own 16-byte block, with 4 bytes unused:

- The first `float3` occupies 16 bytes, but the remaining 4 bytes are unused (due to padding).

- The second `float3` and the first `float` fit into the second 16-byte block without wasting any space.

- The final `float` gets its own 16-byte block, with 12 bytes wasted.

As a general rule, order the fields in your struct so that they fit neatly into 16-byte blocks, without any field “sitting between two blocks.” This minimizes wasted memory and keeps data storage efficient.
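To make the arithmetic concrete, here is a small C++ model of the packing rule described in the note. It is a sketch that assumes cbuffer-style 16-byte packing; real layouts depend on the buffer type and graphics API. `PackedSize` is a hypothetical helper. Field sizes are in bytes: 12 for a `float3` and 4 for a `float`.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Models the 16-byte packing rule: a field may not straddle a 16-byte boundary,
// so it moves to the start of the next block if it would. The total is rounded
// up to whole 16-byte blocks. Only fields of at most 16 bytes are handled
// (float, float2, float3, float4).
std::size_t PackedSize(const std::vector<std::size_t>& fieldSizes) {
    std::size_t offset = 0;
    for (std::size_t size : fieldSizes) {
        assert(size > 0 && size <= 16);
        std::size_t blockStart = offset / 16 * 16;
        if (offset + size > blockStart + 16) {
            offset = blockStart + 16;  // start a new 16-byte block
        }
        offset += size;
    }
    return (offset + 15) / 16 * 16;
}
```

`PackedSize({12, 4, 12, 4})` returns 32 for the recommended field order, and `PackedSize({12, 12, 4, 4})` returns 48 for the alternative order.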

Texture and Other Constants

We will use a texture of format `R16G16B16A16_SFloat`, which means 16 bits (half) per channel. Our calculation doesn’t need to be extremely precise, so half is enough. You could use integer formats instead, but then you would need to write conversion code. For simplicity, we will stick with the float format.

```hlsl
RWTexture2D<half4> _InfluenceTexture;
```

The layout of a pixel looks like this:

- R: direction vector x component

- G: direction vector y component

- B: the elevation of the bottom surface of the object

- A: elapsed time

Also, don’t forget that the magnitude of the direction vector is the initial angle!

Since we will be converting between pixels and world space, we need to know how much world space the texture covers, its world-space offset, and the texture’s size. We pack all of this into a `float4`:

```hlsl
/**
 * x: 1 px = x units in world space (world size / texture size)
 * y: texture px size (square texture)
 * z: WS origin.x (origin is the lower left corner)
 * w: WS origin.y (world z; the texture lies on the XZ plane)
 */
float4 _InfluenceTextureParams;
```
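As a worked example of these parameters, the C++ sketch below fills the four components for a hypothetical setup: a 64 × 64-unit area on a 256 px texture, with its lower-left corner at (-32, -32) on the XZ plane. `MakeInfluenceTextureParams` is an illustrative helper and not part of the original system.

```cpp
#include <cassert>

struct Float4 { float x, y, z, w; };

// x: world units per pixel, y: texture size in pixels (square),
// z/w: world-space XZ of the texture's lower-left corner.
Float4 MakeInfluenceTextureParams(float worldSize, int texSize, float originX, float originZ) {
    assert(worldSize > 0.0f && texSize > 0);
    return {worldSize / static_cast<float>(texSize), static_cast<float>(texSize), originX, originZ};
}
```

With those numbers each pixel covers 0.25 units, so an entity with a radius of 1 unit spans 4 pixels in each direction.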

Finally, we add `_TimeParams`:

```hlsl
/**
 * x: Time.deltaTime
 */
float4 _TimeParams;
```

And that’s all of the ins and outs of the compute shader. Your shader should look like this:

```hlsl
struct InfluenceInfo
{
    float3 worldPosition;
    float radius;
    float3 worldVelocity;
    float height;
};

// raw influence info data
StructuredBuffer<InfluenceInfo> _InfluenceInfo;

// valid length of influence info
int _InfluenceInfoCount;

/** Texture to transcribe influence info to.
 * Data layout of the influence texture:
 * R: N.x \ initial angle (magnitude of RG)
 * G: N.y /
 * B: bottom surface elevation
 * A: elapsed time since release
 */
RWTexture2D<half4> _InfluenceTexture;

/**
 * x: 1 px = x units in world space (world size / texture size)
 * y: texture px size (square texture)
 * z: WS origin.x (origin is the lower left corner)
 * w: WS origin.y (world z; the texture lies on the XZ plane)
 */
float4 _InfluenceTextureParams;

/**
 * x: Time.deltaTime
 */
float4 _TimeParams;
```

Time Update

Let’s start with the easy one. As said before, we need to update the elapsed time each frame. This is simply adding delta time to the time channel of the influence texture, nothing fancy.

```hlsl
#pragma kernel UpdateTime

[numthreads(8,8,1)]
void UpdateTime(uint3 id : SV_DispatchThreadID)
{
    uint2 px = id.xy;

    // ensure the pixel is within bounds
    if (px.x >= _InfluenceTextureParams.y || px.y >= _InfluenceTextureParams.y) return;

    half4 pxData = _InfluenceTexture[px];
    half t = pxData.w;
    t += _TimeParams.x;
    _InfluenceTexture[px] = half4(pxData.xyz, t);
}
```

We update every pixel here, regardless of whether it is in an entity’s influence. That is fine because the transcription pass, which runs afterwards, overwrites the time of every pixel that is under an entity’s influence.
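The interaction between `UpdateTime` and the transcription pass can be modeled on the CPU. This C++ sketch uses a hypothetical `SimulateFrame` that works on a flat array of alpha values. A per-pixel flag stands in for the real entity test. It assumes `UpdateTime` is dispatched before the transcription kernel each frame.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// One frame of the alpha (elapsed time) channel:
// pass 1 (UpdateTime) adds deltaTime to every pixel,
// pass 2 (transcription) resets pixels under an entity's influence to 0.
void SimulateFrame(std::vector<float>& elapsed, const std::vector<bool>& inInfluence,
                   float deltaTime) {
    assert(elapsed.size() == inInfluence.size());
    for (float& t : elapsed) t += deltaTime;          // UpdateTime kernel
    for (std::size_t i = 0; i < elapsed.size(); ++i)  // transcription kernel
        if (inInfluence[i]) elapsed[i] = 0.0f;
}
```

Consider two frames of 0.5 s each. A pixel that is covered in the first frame and released in the second reads 0.5 s afterwards. A pixel that was never covered keeps counting and reads 1.0 s.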

Entity Influence Transcription

Now we need to take care of the pixels that fall into an entity’s influence.

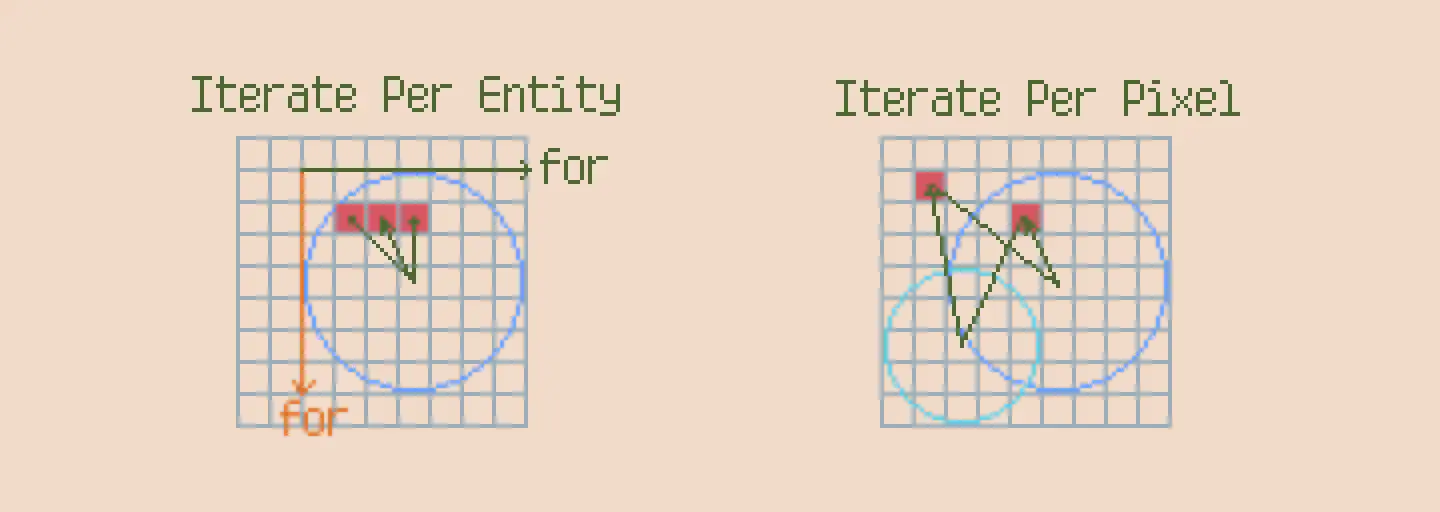

We will implement two different methods for Entity Influence Transcription. Each has its own performance characteristics, and we need to switch between them depending on the entities.

- Iterate Per Entity: Each compute thread handles one entity. It computes the position of every pixel that falls under the influence of that entity and updates it. This method is better when entities are small but numerous.

- Iterate Per Pixel: Each compute thread handles one pixel and iterates through all the entities. If the pixel falls in the influence of any of them, we update the pixel accordingly. This method is better when entities are large but few.

What counts as “small” or “large” depends on the entity’s pixel coverage. An entity with a radius of 5 units on a texture where 1 px = 1 unit covers as many pixels as an entity with a radius of 1 unit on a texture where 1 px = 0.2 units.
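One rough way to compare the two methods is the length of the serial loop each thread runs. The C++ sketch below is a hypothetical heuristic, not part of the original system. It truncates the pixel radius the same way the Method 1 kernel does.

```cpp
#include <cassert>

// Method 1: one thread per entity loops over a (2r+1)^2 pixel square,
// where r = radius / unitsPerPixel, truncated to an int as in the kernel.
int Method1LoopLength(double radius, double unitsPerPixel) {
    assert(radius >= 0.0 && unitsPerPixel > 0.0);
    const int pxRadius = static_cast<int>(radius / unitsPerPixel);
    return (2 * pxRadius + 1) * (2 * pxRadius + 1);
}

// Method 2: one thread per pixel loops over (at most) every registered entity.
int Method2LoopLength(int entityCount) {
    assert(entityCount >= 0);
    return entityCount;
}
```

Both entities from the example above give `Method1LoopLength(...) == 121`, an 11 × 11 square. The estimate ignores how many threads each method launches (one per entity versus one per pixel), occupancy, and memory traffic. Treat it as a starting point for profiling rather than a rule.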

Texture Update Logic

Let’s tackle the common part first: given a pixel and an entity, does the pixel fall into the entity’s influence?

The logic is simple. The pixel is in texture space while the entity’s position is in world space, so we need to compare them in the same space.

- If we compare in pixel, we need to convert entity’s position and its radius to pixel.

- If we compare in world units, we need to convert pixel’s position to world space.

The latter requires one fewer conversion, so we will compare them in world units:

\[\text{PixelWorldPos} = \text{TextureOriginWS} + \text{PixelCoord} \times \text{PixelToWorldRatio}\]

And if the pixel is in the entity’s influence, then its distance to the entity (on the XZ plane) must be smaller than the entity’s radius:

```hlsl
half2 PixelToWorldPos(in uint2 px)
{
    half ratio = _InfluenceTextureParams.x;
    half2 offset = _InfluenceTextureParams.zw; // world XZ of the lower-left corner
    return offset + px * ratio;
}

bool IsPixelInInfluence(in half2 pxWorldPos, in InfluenceInfo entity)
{
    // compare on the XZ plane
    return distance(pxWorldPos, entity.worldPosition.xz) < entity.radius;
}
```

We separate the conversion and the check because later we will also need to use the pixel’s world position. We could convert once and reuse the result in other function calls.
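For debugging, the two helpers can be mirrored in C++. In this sketch the `Float2`/`Float3`/`Float4` structs stand in for the HLSL vector types, and `float` replaces `half`. The texture parameters in the example are hypothetical: 0.25 units per pixel on a 256 px texture with its origin at (-32, -32).

```cpp
#include <cassert>
#include <cmath>

struct Float2 { float x, y; };
struct Float3 { float x, y, z; };
struct Float4 { float x, y, z, w; };

// PixelWorldPos = TextureOriginWS + PixelCoord * PixelToWorldRatio (on the XZ plane)
Float2 PixelToWorldPos(int px, int py, const Float4& params) {
    return {params.z + px * params.x, params.w + py * params.x};
}

// Distance test on the XZ plane, like entity.worldPosition.xz in the shader.
bool IsPixelInInfluence(Float2 pxWorldPos, const Float3& entityPos, float radius) {
    const float dx = pxWorldPos.x - entityPos.x;
    const float dz = pxWorldPos.y - entityPos.z;
    return std::sqrt(dx * dx + dz * dz) < radius;
}
```

Pixel (128, 128) maps to the world origin. An entity at (1, 0, 1) with radius 2 covers it, since the distance is about 1.41. An entity at (2, 0, 0) with the same radius does not, because the test is a strict `<`.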

The next question is, if we found a pixel in an entity’s influence, how do we update its color values?

- For the A channel, we reset the elapsed time to 0, since the entity’s influence holds the grass in a static state.

- For RG (normalized direction), we calculate the displacement from the entity’s center to the pixel in world space and normalize it.

- For the B channel (bottom surface elevation), we use \(elevation = entityY-h_0+h(d)\).

- For the magnitude of RG (initial angle), we will simply apply the formula we saw in part 1 of the series to calculate the initial angle.

```hlsl
// included libraries may already define HALF_PI
#ifndef HALF_PI
#define HALF_PI 1.5707963
#endif

// b * (sqrt(1 - x2 / a2) + 1), with the square root clamped to 0 outside the radius
half EllipticElevation(half x2, half a2, half b)
{
    return b * (sqrt(max(0, 1 - x2 / a2)) + 1);
}

void UpdateInfluence(in uint2 px, in half2 pxWorldPos, in InfluenceInfo entity)
{
    half2 displacement = pxWorldPos - entity.worldPosition.xz;
    half d = length(displacement);

    // normalized direction from the entity center to the pixel (zero-safe)
    half2 direction = displacement * rsqrt(max(dot(displacement, displacement), 1e-6));

    half R2 = entity.radius * entity.radius;
    half h = entity.height;
    half d2 = d * d;

    // calculate initial angle
    half initialAngle = HALF_PI - atan(2 * h * d / max(R2 - d2, 0.00001));

    // calculate elevation
    half entityElevation = entity.worldPosition.y - EllipticElevation(d2, R2, h);

    // RG: direction scaled by the initial angle, B: elevation, A: elapsed time reset to 0
    _InfluenceTexture[px] = half4(direction * max(0.01, initialAngle), entityElevation, 0);
}
```

You might notice that the velocity of the entity is not used. We will be using it in a later chapter to adjust the simulation for moving entities.

NOTE: Strictly speaking, setting the time to 0 causes the grass blade to “teleport” to the target position. You could instead lerp the time towards 0, essentially “reversing” the motion, but that looks particularly odd when the grass is underdamped.
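A C++ mirror of `UpdateInfluence` makes it easy to check the values written to a pixel. In this sketch, `ComputeInfluenceTexel` and `Texel` are hypothetical names, `float` replaces `half`, and the entity is passed as plain numbers rather than as an `InfluenceInfo`.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

struct Texel { float r, g, b, a; };

// Mirrors UpdateInfluence for one pixel. (pxX, pxZ): pixel world position on the
// XZ plane; (ex, ey, ez): entity world position; radius/height as in InfluenceInfo.
Texel ComputeInfluenceTexel(float pxX, float pxZ, float ex, float ey, float ez,
                            float radius, float height) {
    const float halfPi = 1.5707963f;
    const float dx = pxX - ex, dz = pxZ - ez;
    const float d2 = dx * dx + dz * dz;
    const float d = std::sqrt(d2);
    const float r2 = radius * radius;
    assert(r2 > 0.0f);

    // zero-safe normalization of the displacement
    const float invLen = 1.0f / std::sqrt(std::max(d2, 1e-6f));

    // initial angle, with the same denominator clamp as the shader
    const float initialAngle = halfPi - std::atan(2.0f * height * d / std::max(r2 - d2, 1e-5f));

    // entity y minus EllipticElevation(d2, r2, height)
    const float elevation = ey - height * (std::sqrt(std::max(0.0f, 1.0f - d2 / r2)) + 1.0f);

    const float scale = invLen * std::max(0.01f, initialAngle);
    return {dx * scale, dz * scale, elevation, 0.0f};  // A: elapsed time reset to 0
}
```

Take an entity at (0, 3, 0) with radius 2 and height 1. The pixel at world XZ (1, 0) gets RG ≈ (0.983, 0), B ≈ 1.134 and A = 0.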

And that’s it! We will now use them in the actual kernels.

Transcription Method 1: Iterate Per Entity

In this method, each thread processes one entity. We use a one-dimensional thread group of 64 threads and take `SV_DispatchThreadID.x` as the index into the `_InfluenceInfo` buffer, so the dispatch needs ceil(count / 64) thread groups. (`SV_GroupIndex` is only a valid buffer index when a single group is dispatched, which would cap us at 64 entities.) Let’s start by writing some boilerplate code:

```hlsl
#pragma kernel TranscribeMethod1

[numthreads(64,1,1)]
void TranscribeMethod1(uint3 id : SV_DispatchThreadID)
{
    if (id.x >= (uint)_InfluenceInfoCount) return;
    InfluenceInfo info = _InfluenceInfo[id.x];
    //...
}
```

In the next step, we need to determine which pixels are in the entity’s influence. To do this, we will convert the entity’s position and radius into pixels.

```hlsl
#pragma kernel TranscribeMethod1

[numthreads(64,1,1)]
void TranscribeMethod1(uint3 id : SV_DispatchThreadID)
{
    if (id.x >= (uint)_InfluenceInfoCount) return;
    InfluenceInfo info = _InfluenceInfo[id.x];

    half radius = info.radius;
    int pxRadius = (int)(radius / _InfluenceTextureParams.x);
    // int2 rather than uint2, so entities partially outside the texture don't wrap around
    int2 infoPxPos = (int2)floor((info.worldPosition.xz - _InfluenceTextureParams.zw) / _InfluenceTextureParams.x);

    for (int x = -pxRadius; x <= pxRadius; x++)
    {
        for (int y = -pxRadius; y <= pxRadius; y++)
        {
            int2 px = infoPxPos + int2(x, y);
            //...
        }
    }
}
```

The two `for` loops cover a square instead of a circle, so we still need to check whether each pixel is in the circle by calling `IsPixelInInfluence`, and skip pixels that fall outside the texture. If the pixel is indeed in influence, we call `UpdateInfluence`.

```hlsl
#pragma kernel TranscribeMethod1

[numthreads(64,1,1)]
void TranscribeMethod1(uint3 id : SV_DispatchThreadID)
{
    if (id.x >= (uint)_InfluenceInfoCount) return;
    InfluenceInfo info = _InfluenceInfo[id.x];

    half radius = info.radius;
    int pxRadius = (int)(radius / _InfluenceTextureParams.x);
    int2 infoPxPos = (int2)floor((info.worldPosition.xz - _InfluenceTextureParams.zw) / _InfluenceTextureParams.x);
    int texSize = (int)_InfluenceTextureParams.y;

    for (int x = -pxRadius; x <= pxRadius; x++)
    {
        for (int y = -pxRadius; y <= pxRadius; y++)
        {
            int2 px = infoPxPos + int2(x, y);
            // skip pixels that fall outside the texture
            if (any(px < 0) || any(px >= texSize)) continue;

            half2 thisPxWsPos = PixelToWorldPos((uint2)px);
            if (IsPixelInInfluence(thisPxWsPos, info))
            {
                UpdateInfluence((uint2)px, thisPxWsPos, info);
            }
        }
    }
}
```
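Method 1 for a single entity can be mirrored on the CPU to see exactly which pixels one thread touches. In this C++ sketch (hypothetical `PixelsTouchedByEntity`), `unitsPerPixel`, `originX` and `originZ` stand in for `_InfluenceTextureParams.x`, `.z` and `.w`. The entity’s pixel position is found with `floor`, and pixels outside the texture are skipped.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// One Method 1 thread: loop over the square around the entity, keep the pixels
// inside the texture and inside the circle. Returns indices py * texSize + px.
std::vector<int> PixelsTouchedByEntity(float ex, float ez, float radius, int texSize,
                                       float unitsPerPixel, float originX, float originZ) {
    assert(texSize > 0 && unitsPerPixel > 0.0f);
    std::vector<int> touched;
    const int pxRadius = static_cast<int>(radius / unitsPerPixel);
    const int cx = static_cast<int>(std::floor((ex - originX) / unitsPerPixel));
    const int cy = static_cast<int>(std::floor((ez - originZ) / unitsPerPixel));
    for (int x = -pxRadius; x <= pxRadius; ++x) {
        for (int y = -pxRadius; y <= pxRadius; ++y) {
            const int px = cx + x, py = cy + y;
            if (px < 0 || py < 0 || px >= texSize || py >= texSize) continue;  // outside the texture
            const float wx = originX + px * unitsPerPixel;  // PixelToWorldPos
            const float wz = originZ + py * unitsPerPixel;
            const float dx = wx - ex, dz = wz - ez;
            if (std::sqrt(dx * dx + dz * dz) < radius) {  // IsPixelInInfluence
                touched.push_back(py * texSize + px);
            }
        }
    }
    return touched;
}
```

Consider an entity of radius 2 centred on pixel (8, 8) of a 16 px texture at 1 unit per pixel. It touches 9 of the 25 pixels in the 5 × 5 square it loops over. Moved to the texture’s lower-left corner, only 4 of those pixels remain inside the texture.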

Transcription Method 2: Iterate Per Pixel

The idea is similar, but this time we go through all the pixels in the texture and check whether each one is in any entity’s influence. We use `SV_DispatchThreadID`, which gives us the pixel coordinates directly.

```hlsl
#pragma kernel TranscribeMethod2

[numthreads(8,8,1)]
void TranscribeMethod2(uint3 id : SV_DispatchThreadID)
{
    uint2 px = id.xy;
    if (px.x >= _InfluenceTextureParams.y || px.y >= _InfluenceTextureParams.y) return;

    // the pixel's world position does not depend on the entity, so compute it once
    half2 thisPxWsPos = PixelToWorldPos(px);

    // Iterate over each entity
    for (int i = 0; i < _InfluenceInfoCount; i++)
    {
        InfluenceInfo info = _InfluenceInfo[i];
        if (IsPixelInInfluence(thisPxWsPos, info))
        {
            UpdateInfluence(px, thisPxWsPos, info);
            // the first entity that covers the pixel wins
            return;
        }
    }
}
```
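Method 2’s early `return` means that when influences overlap, the first entity in buffer order wins. This C++ sketch mirrors that per-pixel loop, using a hypothetical `Entity` struct (XZ position and radius only) and a hypothetical `FirstInfluencingEntity` function. `count` plays the role of `_InfluenceInfoCount`.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

struct Entity { float x, z, radius; };  // hypothetical XZ-only mirror of InfluenceInfo

// Returns the index of the first entity (in buffer order) whose influence covers
// the pixel's world position, or -1 if none does (the pixel is left untouched).
int FirstInfluencingEntity(float pxWorldX, float pxWorldZ,
                           const std::vector<Entity>& entities, int count) {
    assert(count >= 0 && count <= static_cast<int>(entities.size()));
    for (int i = 0; i < count; ++i) {
        const float dx = pxWorldX - entities[i].x, dz = pxWorldZ - entities[i].z;
        if (std::sqrt(dx * dx + dz * dz) < entities[i].radius) return i;  // early out, like `return` in the kernel
    }
    return -1;
}
```

Because of the early out, overlapping entities resolve deterministically here: the lowest buffer index wins. In Method 1, by contrast, two threads may write the same pixel, and the result depends on execution order.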

And that concludes our compute shader (for now).

In the next part, we will set up the CPU side of the entity influence system.